Human brains make new nerve cells — and lots of them — well into old age

Your brain might make new nerve cells well into old age.

Healthy people in their 70s have just as many young nerve cells, or neurons, in a memory-related part of the brain as do teenagers and young adults, researchers report in the April 5 Cell Stem Cell. The discovery suggests that the hippocampus keeps generating new neurons throughout a person’s life.

The finding contradicts a study published in March, which suggested that neurogenesis in the hippocampus stops in childhood (SN Online: 3/8/18). But the new research fits with a larger pile of evidence showing that adult human brains can, to some extent, make new neurons. While those studies indicate that the process tapers off over time, the new study proposes almost no decline at all.

Understanding how healthy brains change over time is important for researchers untangling the ways that conditions like depression, stress and memory loss affect older brains.

When it comes to studying neurogenesis in humans, “the devil is in the details,” says Jonas Frisén, a neuroscientist at the Karolinska Institute in Stockholm who was not involved in the new research. Small differences in methodology — such as the way brains are preserved or how neurons are counted — can have a big impact on the results, which could explain the conflicting findings. The new paper “is the most rigorous study yet,” he says.

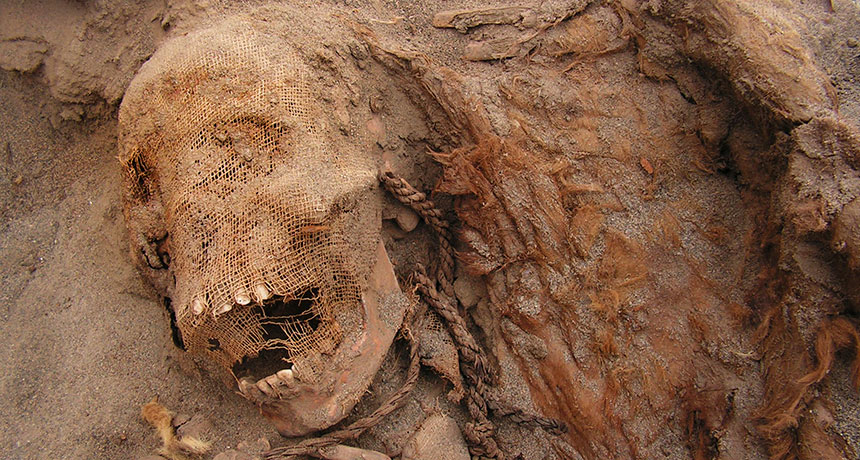

Researchers studied hippocampi from the autopsied brains of 17 men and 11 women ranging in age from 14 to 79. In contrast to past studies that have often relied on donations from patients without a detailed medical history, the researchers knew that none of the donors had a history of psychiatric or chronic illness. And none of the brains tested positive for drugs or alcohol, says Maura Boldrini, a psychiatrist at Columbia University. Boldrini and her colleagues also had access to whole hippocampi, rather than just a few slices, allowing the team to make more accurate estimates of the number of neurons, she says.

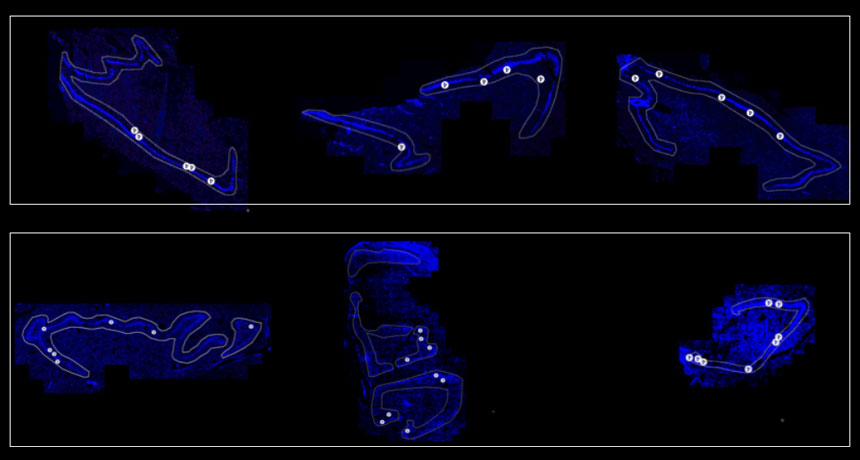

To look for signs of neurogenesis, the researchers hunted for specific proteins produced by neurons at particular stages of development. Proteins such as GFAP and SOX2, for example, are made in abundance by stem cells that eventually turn into neurons, while newborn neurons make more of other proteins, such as Ki-67. In all of the brains, the researchers found evidence of newborn neurons in the dentate gyrus, the part of the hippocampus where neurons are born.

Although the number of neural stem cells was a bit lower in people in their 70s compared with people in their 20s, the older brains still had thousands of these cells. The number of young neurons in intermediate to advanced stages of development was the same across people of all ages.

Still, the healthy older brains did show some signs of decline. Researchers found less evidence for the formation of new blood vessels and fewer protein markers that signal neuroplasticity, or the brain’s ability to make new connections between neurons. But it’s too soon to say what these findings mean for brain function, Boldrini says. Studies on autopsied brains can look at structure but not activity.

Not all neuroscientists are convinced by the findings. “We don’t think that what they are identifying as young neurons actually are,” says Arturo Alvarez-Buylla of the University of California, San Francisco, who coauthored the recent paper that found no signs of neurogenesis in adult brains. In his study, some of the cells his team initially flagged as young neurons turned out to be mature cells upon further investigation.

But others say the new findings are sound. “They use very sophisticated methodology,” Frisén says, and control for factors that Alvarez-Buylla’s study didn’t, such as the type of preservative used on the brains.