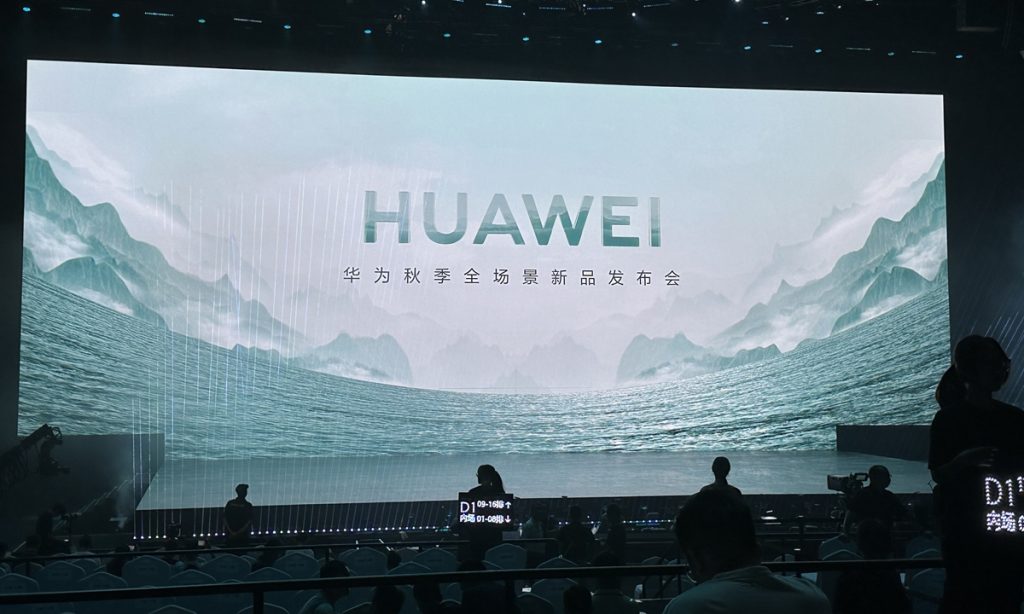

Huawei makes high-profile comeback with launch of all-scenario products amid reported chip breakthrough

Huawei launched new products ranging from smart screens, the MatePad and watches to the new HarmonyOS NEXT system at a highly anticipated event in Shenzhen, South China's Guangdong Province on Monday, making a high-profile comeback to the market amid a reported chip breakthrough and years-long US sanctions.

Although the event did not reveal details of the closely watched Mate 60 series, consumers remain enthusiastic about the release, viewing it as a symbolic moment for the Chinese tech giant to regain lost ground in its consumer business and challenge industry giants such as Apple.

Huawei has dominated major Chinese social media platforms under hashtags such as #FarAhead since the surprise presale of the Mate 60 series on August 29. The series has since become a best-seller across the country.

The Monday launch event intensified the fanfare nationwide, drawing live broadcasts and livestreams from more than 100 media outlets. Audience members at the venue shouted "Far Ahead Rivals" at each product announcement.

Yu Chengdong, CEO of the Huawei Consumer Business Group, said at the opening that the company is ramping up efforts and working overtime to produce its devices to meet market demand for the newly released handsets. "Thanks to Chinese consumers for their all-out support for Huawei," Yu said.

Some had expected Huawei to formally detail the phones' specifications at the Monday event, in particular the chipset in the Mate 60 series, which reportedly uses the Kirin 9000s chip built on either a 7-nanometer (nm) or 5-nm process.

Huawei has remained tight-lipped about the chip's capabilities. But industry analysts believe the handset shows that the US-sanctioned tech giant has finally found a way through and is poised for a dramatic turnaround after years of struggle, especially in the high-end smartphone market.

Demand for the Mate 60 Pro has been strong since its surprise launch on August 29, and the shipment plan for the second half of 2023 has been raised by about 20 percent to 5.5-6 million units. Based on this trend, cumulative shipments of the Mate 60 Pro are expected to reach at least 12 million units within 12 months of the launch, Kuo Ming-Chi, an analyst at TF International Securities, said in a note.

Huawei's "undeniable influence" on industries and stock markets is coming back, Kuo said. He noted that Huawei's comeback is actually good for consumers, as it could force Apple to step out of its comfort zone and innovate more aggressively.

At a time when mobile phone consumption is relatively sluggish, the return of Huawei's high-end phones is a landmark event signaling that the mobile phone consumer market is bottoming out, Adela Guo, a research director at IDC Asia Pacific, told the Global Times on Monday.

In the short term, Huawei's return is expected to drive an upsurge in the domestic mobile phone consumer market. It will also intensify competition in the high-end segment, Guo said.

Monday also marked the second anniversary of Meng Wanzhou's return to China in 2021. Meng, now a rotating chairperson of Huawei, was arrested by Canadian authorities in December 2018 at the request of the US government.

Some netizens said a stronger Huawei returning to the center stage of global tech innovation may be seen as "a slap in the face" to the US government's ruthless suppression of and attacks on the leading Chinese tech company, especially as the event date marked the second anniversary of Meng's safe return from Canada to China.

"Huawei's mobile phone business, which has been unreasonably suppressed for more than three years, has broken through major obstacles and been completely rebuilt. We can see that all the Huawei partners, Huawei fans and the media are quite excited, and there is a festive atmosphere on site," Jiang Junmu, a Shanghai-based veteran industry expert and close follower of Huawei, told the Global Times on Monday.

Huawei also unveiled the latest progress on its self-developed operating system, HarmonyOS, at the event, including the new release of HarmonyOS NEXT.

"A coordination of software and hardware will help Huawei construct a more perfect, complete system that will help it return to the international market," Jiang said.

There are now more than 30 operating systems in China based on open-source HarmonyOS, covering industry terminals, mobile phones, tablets and home terminals. Together they have approximately 600 million users, ranking third in the world, Huawei founder Ren Zhengfei said in a recent interview with Liu Yadong, dean of the School of Journalism and Communication at Nankai University.

Ren said that if China establishes its own standard system, it will definitely be better than that of the US.

Ren compared the standard system to "clothes," saying that the US "clothes" had been mended again and again and were covered in patches, whereas Huawei has made new "clothes" in recent years. "We will directly make standards better than those in the US. In addition to being used in China, it is used all over the world," Ren said.

Huawei may encounter more difficulties, but at the same time it will become more prosperous, Ren said.